Recently one of my friends in the Treehouse Writers' group alerted me to the article "Sexism in publishing: my novel wasn't the problem, it was me, Catherine" in the Guardian. You should read it, but here's the punchline:

In an essay for Jezebel, Nichols reveals how after she sent out her novel to 50 agents, she received just two manuscript requests. But when she set up a new email address under a male name, and submitted the same covering letter and pages to 50 agents, it was requested 17 times.

“He is eight and a half times better than me at writing the same book. Fully a third of the agents who saw his query wanted to see more, where my numbers never did shift from one in 25,” writes Nichols. “The judgments about my work that had seemed as solid as the walls of my house had turned out to be meaningless. My novel wasn’t the problem, it was me – Catherine.”

Catherine Nichols' original article is up at Jezebel under the title Homme de Plume - go check it out - but the point of raising the article was to gather people's opinions. The exchange went something like this: "Opinions?" "Outrage?"

Yes, it's outrageous, but hardly a surprise. I've heard stories like this again and again from many women writers. (Amusingly, or perhaps horrifyingly, the program I'm writing this in, Ecto, just spell-corrected "women writers" to "some writers," so perhaps the problem is more pervasive than I thought.) Science fiction authors Andre Norton, James Tiptree, Jr., C. J. Cherryh, Paul Ashwell and C. L. Moore all hid their genders behind male or gender-neutral pseudonyms to help sell their work. Behind the scenes, prejudice against women authors is pervasive. And I'm not even referring to the disparaging opinions of conscious misogynists who'll freely tell you they don't like fiction written by women, or to the discriminatory actions of the unconsciously prejudiced who simply don't buy fiction written by women. I'm referring to calculated discrimination, sometimes on the part of women authors, editors and publishers themselves, who feel the need to hide an author's gender to make sure the stories sell.

I am a guy, so I've never faced the problem of having to choose between acknowledging or suppressing my own gender in the face of the prejudices of those who would disparage my existence. (I have gotten a bit of flak for being a male paranormal romance author, but we got around that by calling my work "urban fantasy," which my editor thought was a better description anyway.) As a business decision, I respect any woman (or man) who chooses a pseudonym that will better market their work. My friend Trisha Wooldridge edits under Trisha Wooldridge but writes under T. J. Wooldridge, not because publishers won't buy her work, but because her publisher believes some of the young boys at whom her YA is aimed are less likely to read books by female authors. The counterexample might be J. K. Rowling, but even she is listed as J. K. Rowling and not Joanne because her publishers were worried young boys wouldn't buy her books. She's made something like a kabillion dollars under the name J. K. Rowling, so that wasn't a poor business decision (interestingly, Ecto just spell-corrected "decision" to "deception"), but we'll never know how well she would have done had the Harry Potter series been published under the name "Joanne Rowling".

And because we'll never know, I feel it's high time that female authors got known for writing under their own names.

Now, intellectual honesty demands I unload a bit of critical thinking that's nagging at me. In this day and age, when we can't trust anything on the Internet, when real ongoing tragedies are muddled by people writing and publishing fake stories to push otherwise legitimate agendas for which there's already enough real horrific evidence (I'm looking at you, Rolling Stone), we should always get a nagging feeling about this kind of story: a story where someone complains that the system is stacked against them. For example, in Bait and Switch Barbara Ehrenreich tried to expose the perils of job hunting … by lying about her resume, and then writing a book about how surprised she was that she didn't get hired by any of the people she was lying to. (Hint, liars: just because it's not socially acceptable to call someone a liar doesn't mean we're not totally on to you. And yes, I mean you, you personally, the individual(s) who are lying to me and thinking you're getting away with it because I smile and nod politely.)

In particular, whenever someone complains that they're having difficulty getting published, there always is (or should be) a nagging suspicion in the back of your mind that the problem might be with the material, not the process. According to legend, one SF author who was having trouble getting published once called up Harlan Ellison (yes, THAT Harlan Ellison) and asked why, to which Harlan responded, "Okay, write this down. You ready? You aren't getting published because your stories suck. Got it? Peace out." Actually, Harlan probably didn't say "peace out," and there may have been more curse words or HARSH TONAL INFLECTIONS that I simply can't represent typographically without violating the Peace Treaty of Unicode. So there's this gut reaction that makes us want to say, "So what if someone couldn't get published?"

But, taking her story at face value, what happened to Catherine Nichols was the precise opposite of what happened to Barbara Ehrenreich. When Nichols started lying about her name, which in theory should have made no difference or even made things harder for her … she instead started getting more responses, which makes the prejudice against her seem even stronger. Even the initial situation she was in, getting rejections from some 50 agents, is something that happens over and over again in the history of publishing … but sooner or later, even the most patient stone is worn away. Legendary writing teacher John Gardner had a similar thought: "The writer sends out, and sends again, and again and again, and the rejections keep coming, whether printed slips or letters, and so at last the moment comes when many a promising writer folds his wings and drops." Or, in Nichols' own words:

To some degree, I was being conditioned like a lab animal against ambition. My book was getting at least a few of those rejections because it was big, not because it was bad. George [her pseudonym], I imagine, would have been getting his “clever”s all along and would be writing something enormous now. In theory, the results of my experiment are vindicating, but I feel furious at having spent so much time in that ridiculous little cage, where so many people with the wrong kind of name are burning out their energy and intelligence. My name—Catherine—sounds as white and as relatively authoritative as any distinctly feminine name could, so I can only assume that changing other ethnic and class markers would have even more striking effects.

So we're crushing women writers … or worse, pre-judging their works. The Jezebel article quotes Norman Mailer:

In 1998, Prose had dubbed bias against women’s writing “gynobibliophobia”, citing Norman Mailer’s comment that “I can only say that the sniffs I get from the ink of the women are always fey, old-hat, Quaintsy Goysy, tiny, too dykily psychotic, crippled, creepish, fashionable, frigid, outer-Baroque, maquillé in mannequin’s whimsy, or else bright and stillborn”.

Now, I don't know what Mailer was sniffing, but now that the quote is free floating, let me just say that if he can cram the ink from Gertrude Stein, Ayn Rand, Virginia Woolf, Jane Austen, Emily Dickinson, Patricia Briggs, Donna Tartt, Agatha Christie, J. K. Rowling and Laurell Hamilton into the same bundle of fey, old-hat smells, he must have a hell of a nose.

But Mailer's quote, which bins an enormous number of disparate reactions into a single judgment, looks like a textbook example of unconscious bias. As Malcolm Gladwell details in Blink, psychological priming prior to an event can literally change our experience of it: if I give you a drink in a Pepsi can instead of a Coke can, your taste experience will be literally different even if it's the same soda. This seems a bit crazy, until you change the game a bit further and make the labels Vanilla Pepsi and Coke Zero: then you can start to see how the same soda could seem flat if it lacks an expected flavor, or too sweet if you are expecting an artificial sweetener. These unconscious expectations can lead to a halo effect: if you already think someone's a genius, you're more likely to read their behavior as more genius, where the same behavior in someone else may seem like eccentricity or arrogance. The only solution to this kind of unconscious bias, according to Gladwell, is to expose yourself to more and more of the unfamiliar stimulus, so that it seems natural rather than foreign.

So I feel it's high time not only that female authors feel free to write under their own names, but also that the rest of us start reading them.

I'm never going to tell someone not to use a pseudonym. There are a dozen reasons to do it, from business decisions to personal privacy to exploring different personas. There's something weirdly thrilling about Catherine Nichols' description of her male pseudonym, her "homme de plume," whom she imagined “as a sort of reptilian Michael Fassbender-looking guy, drinking whiskey and walking around train yards at night while I did the work.”

But no-one should have to hide their gender just to get published. No-one, man or woman; but since women are having most of the trouble, that's where our society needs to do most of its work. Or, to give (almost) the last word to Catherine:

The agents themselves were both men and women, which is not surprising because bias would hardly have a chance to damage people if it weren’t pervasive. It’s not something a few people do to everyone else. It goes through all the ways we think of ourselves and each other.

So it's something we should all work on. That's your homework, folks: step out of your circle and read something different.

-the Centaur

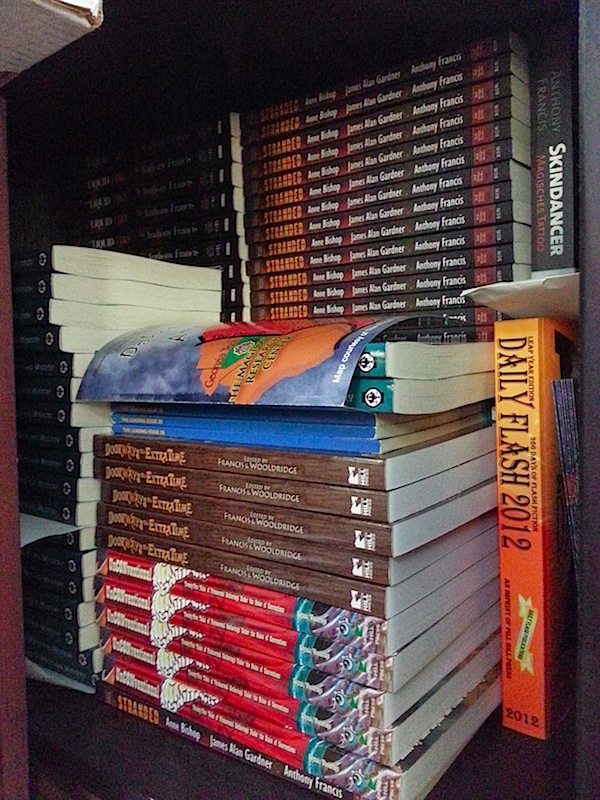

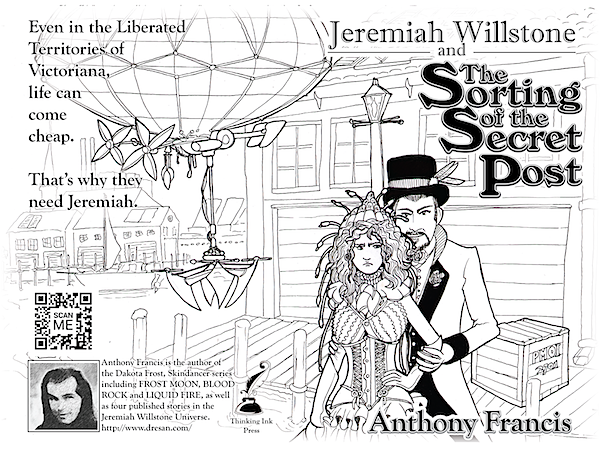

Pictured: Some art by my wife, Sandi Billingsley, who thinks a lot about male and female personas and the cages we're put in.