I can be such an idiot sometimes ... or, put in other words, the right way to solve a problem is often much, much easier than the wrong way.

For example, if you're doing woodworking, you may use a modern steel clamp to hold a part tight to work on it. That sounds good and does the job. Of course, when you need to change the position of the part you must unscrew the clamp, reposition the part and rescrew the clamp.

So far, so good ... but, according to David Petersen, the author of Mouse Guard, there is a better way. Petersen researched medieval woodworking equipment for his Eisner-award winning comic and found there was a simpler scheme involving a foot pedal and a lever, which had equal gripping power but could release and reapply pressure in seconds just by lifting your foot.

Moral: newer and more complex is not always better.

Fast forward eight hundred and fifty years. Robert Kroese, a colleague at the Search Engine That Starts With A G, has his own book that he's working on, and an associated web site, Mercury Falls. On that site he has a form for signing up to an email list, and I thought, what a great idea! I should have a form where someone can sign up for an email list on the Dakota Frost site.

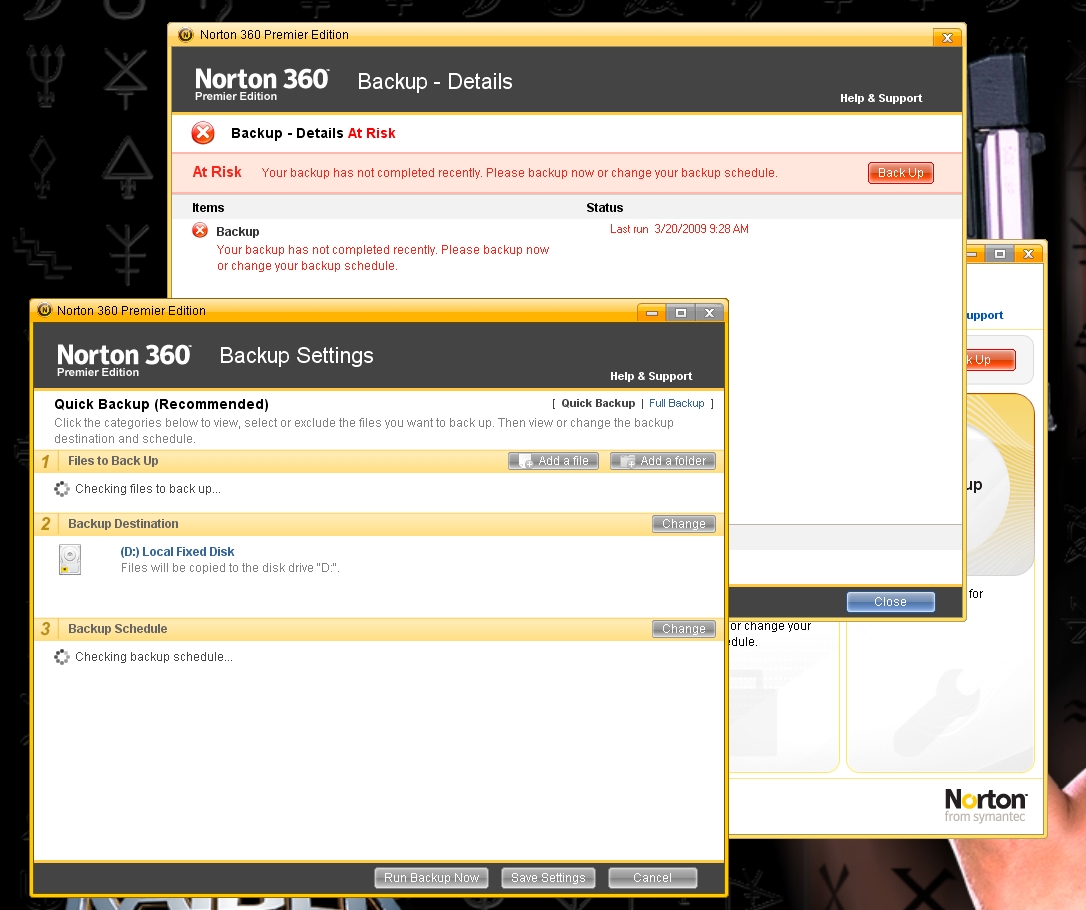

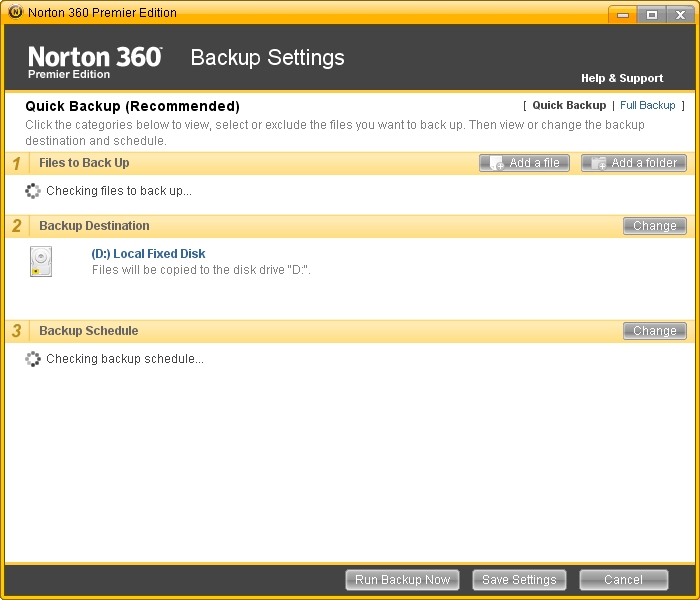

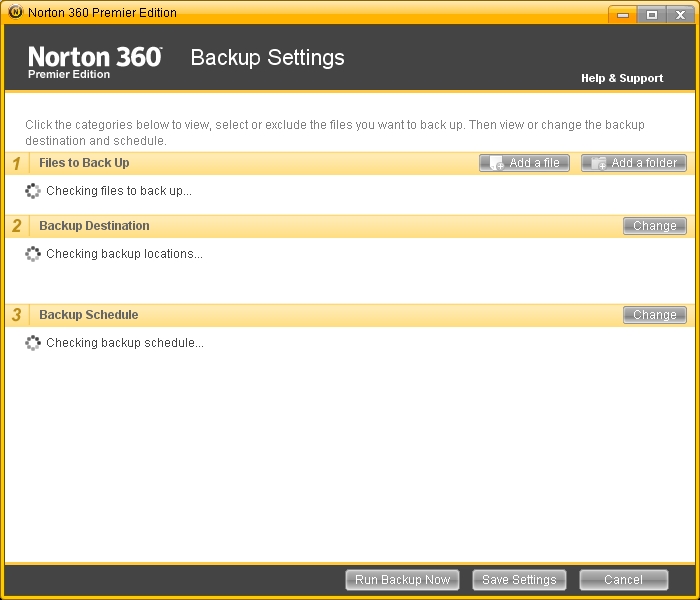

So I started looking into it. To make the form work, you need not only a web form, which is easy to set up, but also some kind of server program on the back end which can accept the results of the form and a database to store it.

Historically, I've had bad luck with scripts and databases on my web sites: Earthlink / Mindspring basically welched on the scripting features of their web hosting that I was paying for, and my next provider, Tophosting, screwed up one of my databases.

So I was hesitant, and I started thinking. Then it hit me...

... there was a simpler way.

Instead of creating a form and the backend plumbing that goes with it, I should use the existing plumbing I had to achieve the same effect. What plumbing was already in place? A web site, a hosting provider, an ability to forward emails to a given address ... and a mail client with filters.

To make this work, I went to the GoDaddy control panel for Dakota Frost and set up a forwarding email: contact at dakota frost dot com. I had that sent to one of my catchall email accounts, and in Gmail I then set up a filter which collected all those email addresses into a single folder. Bam: problem solved.

Even if I want to do something more complex, this solution still works, as long as I keep looking at simple tools that are already available. For example, if I want an official email address list as a separate file, I could always download those email messages to the mail client of my choice, filter the messages to a folder, and grep over the email addresses in the file. For the scale at which I need to do it right now, the problem is still solved.
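As a rough illustration of that "download and grep" step, here is a minimal Python sketch. It assumes the filtered messages have been exported from the mail client as a single mbox file, and that each subscriber's address sits in the `From` header. The function name `collect_sender_addresses` is made up for this example; it is not something I actually run.

```python
import mailbox
from email.utils import parseaddr


def collect_sender_addresses(mbox_path):
    """Return the sorted, de-duplicated sender addresses found in an mbox file.

    Assumption: mbox_path points at an export of the filtered mail folder.
    """
    addresses = set()
    for message in mailbox.mbox(mbox_path):
        # parseaddr splits 'Jane Reader <jane@example.com>' into (name, address).
        _, address = parseaddr(str(message.get("From", "")))
        if address:
            # Lowercase so the same person is not counted twice.
            addresses.add(address.lower())
    return sorted(addresses)
```

Calling `collect_sender_addresses` on the exported file and writing the result out one address per line would give the "official" list as a separate file, with no server-side code at all.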

Moral of the story: the more you overthink the plumbing, the easier it is to stop up the drain. Keep it simple, and things should just keep flowing without effort.

Or, to translate this back into development speak, there are two kinds of solutions: those which are easy to think up but take a lot of coding effort to make work, and those which require thought but can be implemented in staggeringly small amounts of code.

In this one, we have an extreme example: to solve this problem the "no thinking way" would require an HTML form, a CGI script, a database, and considerable configuration on the server side of my hosting provider. To solve it the "no effort way" required some thought, but in the end took less configuration of my hosting provider and a few minutes setting up some email filters.

You see the same thing in software libraries: really good libraries don't take a lot of code, but that doesn't mean that they didn't take a lot of work. What happened behind the scenes was a lot of thought, wherein the library author searched the space of possible designs until he found a great one before ever publishing the library. You as the consumer don't see that effort, no matter how little or how much time it took: you only see the pure, parsimonious, elegant, efficient piece of code that remains.

If you don't put thought into what you're doing, you might try it sometime. You'd be surprised how little thought it takes to get substantially improved results.

-the Centaur