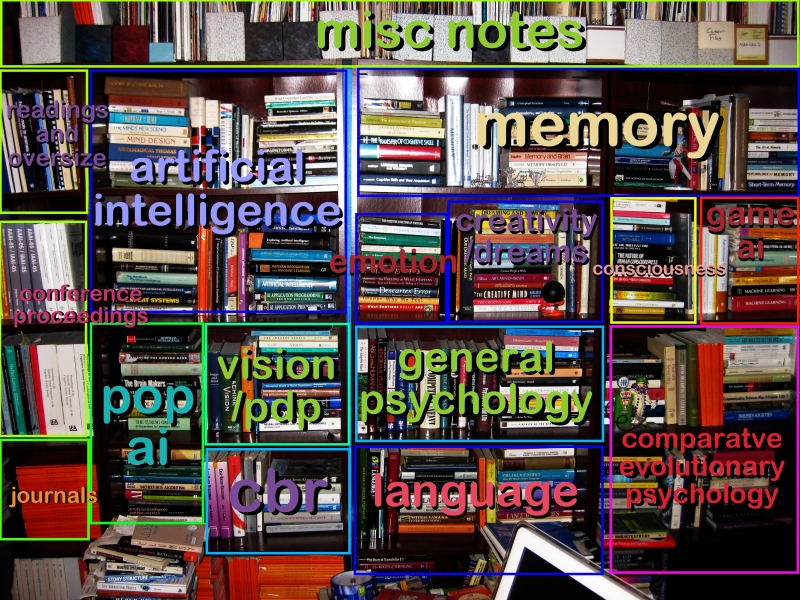

As a teenager I used to play OGRE and GEV, the quintessential microgames produced by Steve Jackson, featuring cybernetic tanks called OGREs facing off against a variety of lesser tanks. For those who don't remember "microgames," they were sold in small plastic bags or boxes containing a rulebook, a map, and a set of perforated cardboard pieces used to play the game. After playing a lot, we extended OGRE by creating our own units and pieces from cut-up paper; the lead miniature you see in the pictures came much later and was not part of the original game.

In OGRE's purest form, however, one OGRE, a mammoth cybernetic vehicle, faced off against a dozen or so other tanks firing tactical nuclear weapons ... and thanks to its incredible firepower and meters of lightweight BPC armor, it would be just about an even fight. Below you see a GEV (Ground Effect Vehicle) about to have a very bad day.

OGREs were based (in part) on the intelligent tanks of Keith Laumer's Bolo series, but there was also an OGRE timeline detailing the development of the armor and weapons that made tank battles make sense in the 21st century. So there was a special thrill in playing OGRE: I got to relive my favorite Keith Laumer story, in which a decommissioned, radioactive OGRE is accidentally reawakened and digs its way out of its concrete tomb to continue the fight. (The touching redemption scene, in which the tank's former commander convinces it not to lay waste to the countryside, was sadly left out of the game mechanics of Steve Jackson's initial design.)

But how realistic are tales of cybernetic tanks? AI is famous for overpromising and underdelivering: it's nigh on 2010, and we don't have HAL 9000, much less the Terminator. But OGRE, being a boardgame, did not need to satisfy filmmakers' desire to present a near future people could relate to, so it did not compress the timeline to the point of unbelievability. According to Steve Jackson's OGRE chronology, the OGRE Mark I was supposed to come out in 2060. From what I can see, that date is a little pessimistic. Take a look at this video from General Dynamics:

[youtube=http://www.youtube.com/watch?v=jCAiQyuWfOk]

It even has the distinctive high OGRE turret, in the form of an automated XM307 machine gun. Scary! Admittedly, the XUV is a remote-controlled vehicle, not a fully automated battle tank capable of deciding our fate in a millisecond. But that's not far off... General Dynamics is working on autonomous vehicle navigation, and they're not alone. Take a look at Stanley driving itself to victory in the DARPA Grand Challenge:

[youtube=http://www.youtube.com/watch?v=LZ3bbHTsOL4]

Now, that's more like it! Soon, I will be able to relive the boardgames of my youth in real life ... running from an automated tank ... hell-bent on destroying the entire countryside ...

Hm.

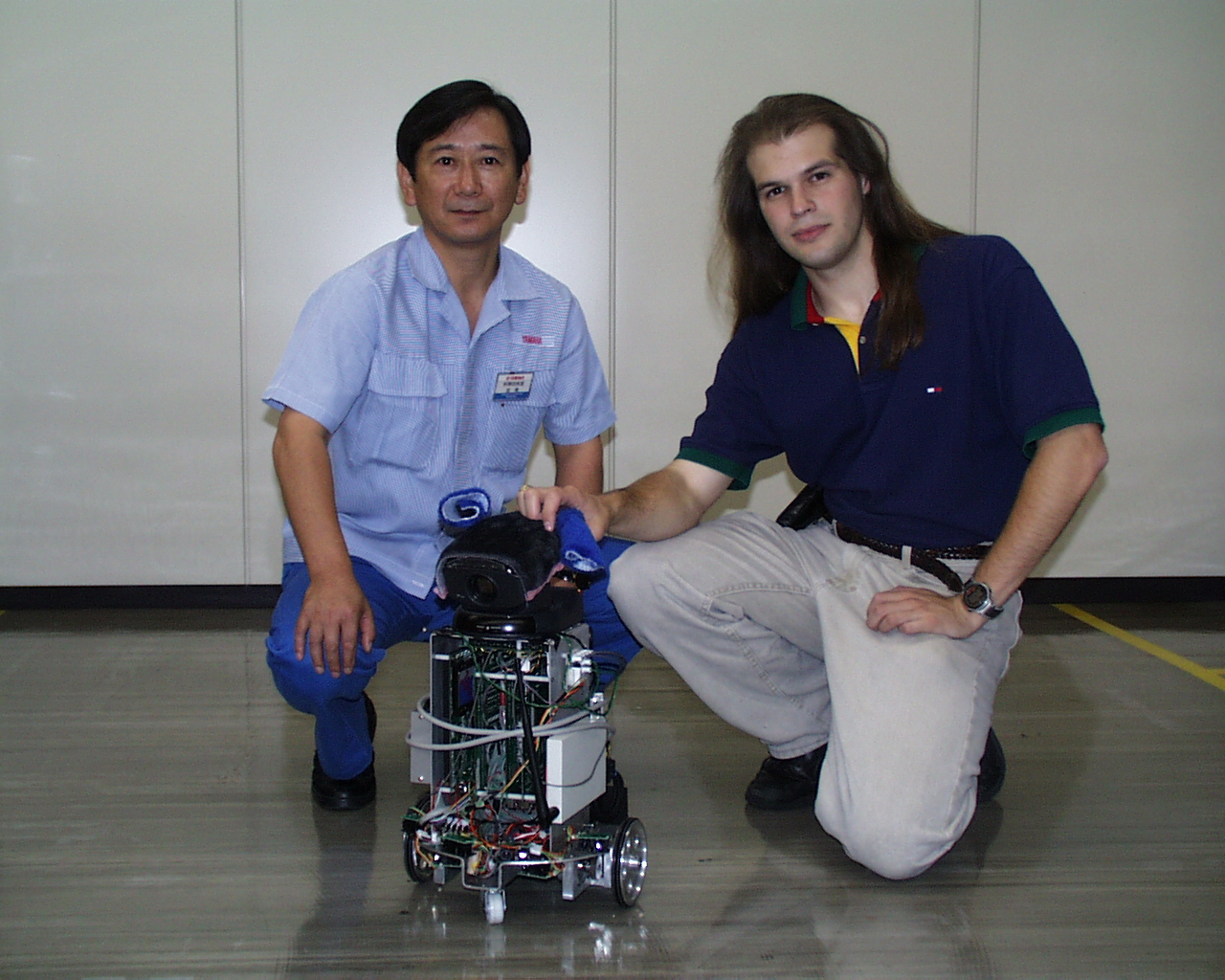

Somehow, that doesn't sound so appealing. I have an idea! Instead of building killer death-bots, why don't we try building some of these instead (full disclosure: I've worked in robotic pet research):

[youtube=http://www.youtube.com/watch?v=NKAeihiy5Ck]

Oh, wait. The AIBO program was canceled ... as was the XM307. Stupid economics. It's supposed to be John Connor saving us from the robot apocalypse, not Paul Krugman and Greg Mankiw.

-the Centaur

Pictured: Various shots of OGRE T-shirt, book, rules, pieces, and miniatures, along with the re-released version of the OGRE and GEV games. Videos courtesy of YouTube.