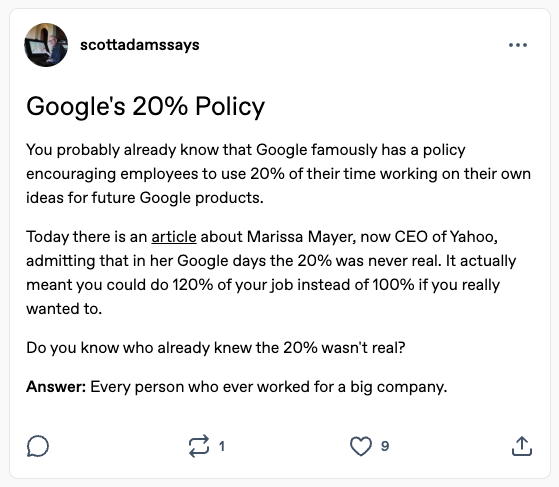

Recently I came across the above tweet (tumble, post, whatevz) from Scott Adams dissing Google's 20% time policy and linking to an article quoting Marissa Mayer's purported "debunking" of 20% time.

As usual, Scott, and Marissa, don't know what the fuck they're talking about.

First, Scott.

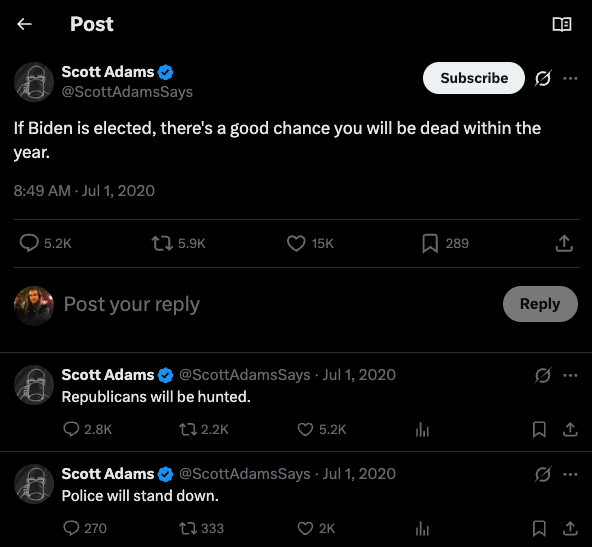

Scott Adams spent 16 years working in big businesses, and hated it so much that he heroically burned the midnight (well, 4am) oil for several years, ultimately creating the beloved, insightful and world-renowned Dilbert cartoon upon which his reputation rests. Then Scott spun off into other political and philosophical ventures, some of which turned out well (such as his successful analysis and prediction of the success of Donald Trump's first term) and others which did not (such as imagining that there was a "good chance Republicans [would] be dead within a year if Joe Biden won the 2020 presidential election". But hey, he's a humorist, right, it's satirical, right, and not some motte-and-bailey play, right? Right?)

Scott, you've been right about many things, though I sincerely hope you were wrong about your illness and its unfavorable prognosis. I hope your prognosis improves and you get access to all the treatments that you and your doctors want, and that they are effective in improving your longevity and quality of life.

But about Google, you spent 16 years at banks and telecoms back in the last millennium. I have spent the past 26 years in the startup and dot-com space, including 17 years at Google, longer than your entire big-business career as reported. Your information is stale, your direct knowledge of Google's internals is virtually nonexistent, and so your arguments are invalid.

Now, Marissa.

Marissa Mayer was an executive at Google known in the Valley for "always ending meetings on time". Well, it turns out, her actual quote was "stick to the clock", which makes a little bit more sense in terms of flexibility, but still isn't accurate, because when she was meeting in the conference room directly across from my office in Building 43 of the Googleplex, she almost never ended meetings on time.

Marissa's meetings running over happened so often it got to be a joke, until it wasn't. The teams in nearby offices learned to try to schedule meetings in other conference rooms in case Marissa or another VP was running over. Until I, meeting with two Google New York visitors, had the uncomfortable experience of the two of them barging into the room where Marissa was still finishing up her meeting 5 minutes after the hour, not knowing that she was a VP, and just knowing that she was rude. Well, I guess they showed her.

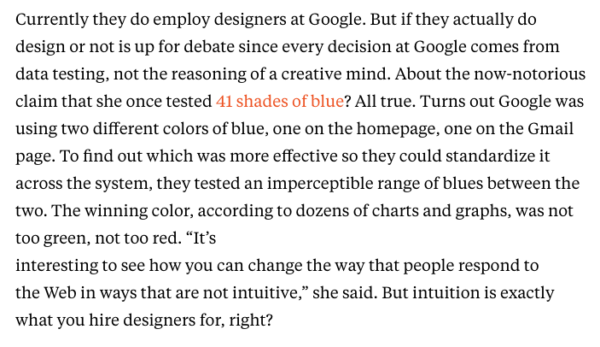

Now, I could pick on her continued lateness at Yahoo, or her inappropriate focus on micro-details of user interface design - such as the rumor that she once tested 41 shades of blue on the Google home page. Now, if you don't know how statistics work, you might think that's data-driven design; however, if you do know how statistics work, you know that the test-retest reliability of different shades of blue in a complex user interface exposed to millions of users is likely to be very low over any appreciable span of time, and that Marissa was wasting engineers' time and Google's money just chasing noise.

But what I really want to pick on is her comments about 20% time.

Marissa, I'm sorry, but I don't have as much good to say about you as I do about Scott. I'm genuinely sorry your stint at Yahoo didn't work out, but to all external appearances it's a direct consequence of the toxic environment you helped create in the teams you worked with at Google. This goes beyond creating a hostile relationship between user interface and software engineering, something I had to contend with long after you left the company; this goes beyond pursuing a quixotic attention to micro-detail that is directly contradicted by researchers at Google itself (admittedly, long after you left).

It even goes beyond your toxic perfectionism, repeatedly killing development projects internally because their additions to the search results didn't reach some absurdly high degree of accuracy; this helped foster a Google-wide attitude of caution that meant internal teams couldn't develop certain products, and we had to buy external companies like (the very nice) Metaweb for millions upon millions of dollars - but hey, guess what? The external systems we acquired also didn't reach the same absurdly high degree of accuracy, and if we had just let our internal teams develop shit and iterate to perfect it, we would have built more, internally, and cheaper, with a more harmonious and less stressful internal culture.

No, it's because you don't know what the fuck you're talking about when it comes to how Google works. You worked for Google for 13 years, but I worked for Google for 17 years, and in the six years we overlapped at the company plus the previous year in which I was recruited, the perception you apparently acquired of how Google worked was directly contradicted by the available evidence, so your arguments are invalid.

Now.

Google's 20% time.

Google's 20% time, in case you don't know, enabled Google employees to spend up to one fifth of their time working on a personal project. It had to be for the company and your manager had to approve, but otherwise it was flexible. Google recruiters directly advertised 20% time as one of the perks of being at Google. I was allowed to directly interview Google employees who confirmed that it existed, though at least one of them said that they were so interested in their main project that they had no time for 20% efforts. When I joined Google, as far as I can recall, every manager I ever had was supportive of 20% time, and every team that I was on, and many of the teams that surrounded us, always had at least one person working on a 20% project, some of them quite substantial. I myself worked on a fair number of 20% projects. Most importantly, in all the time I worked there, it was never something that you had to work 120% time to do.

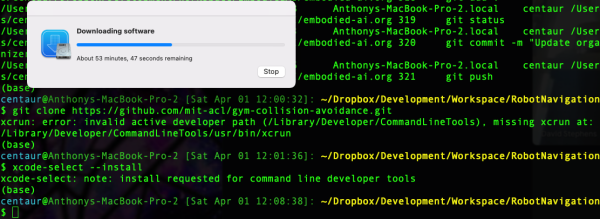

On that point, most notably, robotics at Google began, as far as I personally know, at the 2010 Robotics 20% Taskforce, when about 20 engineers, user interface designers, and product managers pooled their banked-up 20% time and got together for a couple of weeks to prototype robotics systems. That led to an early "Cloud Robotics" team that formed in late 2010 or early 2011, first presenting its work publicly at Google I/O in 2011. That project didn't survive, but the team did, and many of its alumni went on to other Robotics projects at Google, notably Replicant and later Robotics at Google.

During my time there, Google was heterogeneous in both time and space. There were many individuals, managers, teams and divisions that did not participate in or support 20% time. And there were many times that teams that did support it were engaged in full-court-press work that didn't leave time for 20% work.

But 20% time was an important part of most teams that I worked at and most teams that I worked with during my 17 years at the company, and while there were a few skeptics, it remained an important part of the company culture during my entire time there, making key contributions to Ads, News, and Robotics. As far as I know, it was still part of the company culture right up until when I was laid off in 2023. After that, the people I know working at Google are all in Google Gemini and are way too busy, so, who knows. But the layoffs and Gemini happened way after Scott's and Marissa's comments in 2015, so it isn't pertinent.

Or, put another way ... Marissa Mayer and Scott Adams didn't know what the fuck they were talking about when they tried to "debunk" Google's 20% time.

-the Centaur

P.S. The Wikipedia page on Google's implementation of "Side Project Time" attaches a [citation needed] to "The creator of [Google News] was Krishna Bharat, who developed this software in his dedicated project time."

Well, you can fucking cite me and this blog post. Krishna Bharat was my second manager at Google, and he told me directly in one of our 1-1 conversations that he created Google News as a bunch of Perl scripts following the 9/11 attacks to help him keep up with the headlines. Krishna was a master of spinning up small things into something big, and turned that humble beginning into the product that became the world's largest news aggregator. I don't remember whether he mentioned it was developed in what we later called 20% time, but it wasn't his primary responsibility, Google obviously supported and encouraged his work on it, and the entire arc of his side work and subsequent development is precisely consistent with the use of 20% project time that made Google one of the most vibrant and creative companies in history.

So, today's exercise was something very difficult for me: abandoning a failed rough and starting over.

You see, many artists that I know will get sucked into perfecting a drawing that has some core flaw in its bones - this is something I ran into with my Batman cover page. I know one artist who has worked over a handful of difficult paintings for literally 2-3 years ... but who can produce dozens of new paintings for a show at the drop of a hat. But it's hard emotionally to let go of the investment in a partially finished piece.

This is tied up with the

I started what I intended to be a quick sketch, and got partway into the roughs ...

... when I decided that the shape of the face was off - and the proportions of the arm were even further off. I started to fix it - you can see a few doubled features like eyes and lips in there - but I decided - ha, decided - no, stop, STOP Anthony, this rough is too far gone.

Start over, and look more closely at what you see this time.

That led to the drawing at the top of the entry. There were still problems with the finished piece - I am continuing to have trouble with tilting heads the wrong way, and something went wrong with the shape of the arm, leading to a too-narrow, too-long wrist - but the bones of the sketch were so much better than the first attempt that it was easy to finish the drawing.

And thus, keep up drawing every day.

-the Centaur

(1) I'm not bitter.

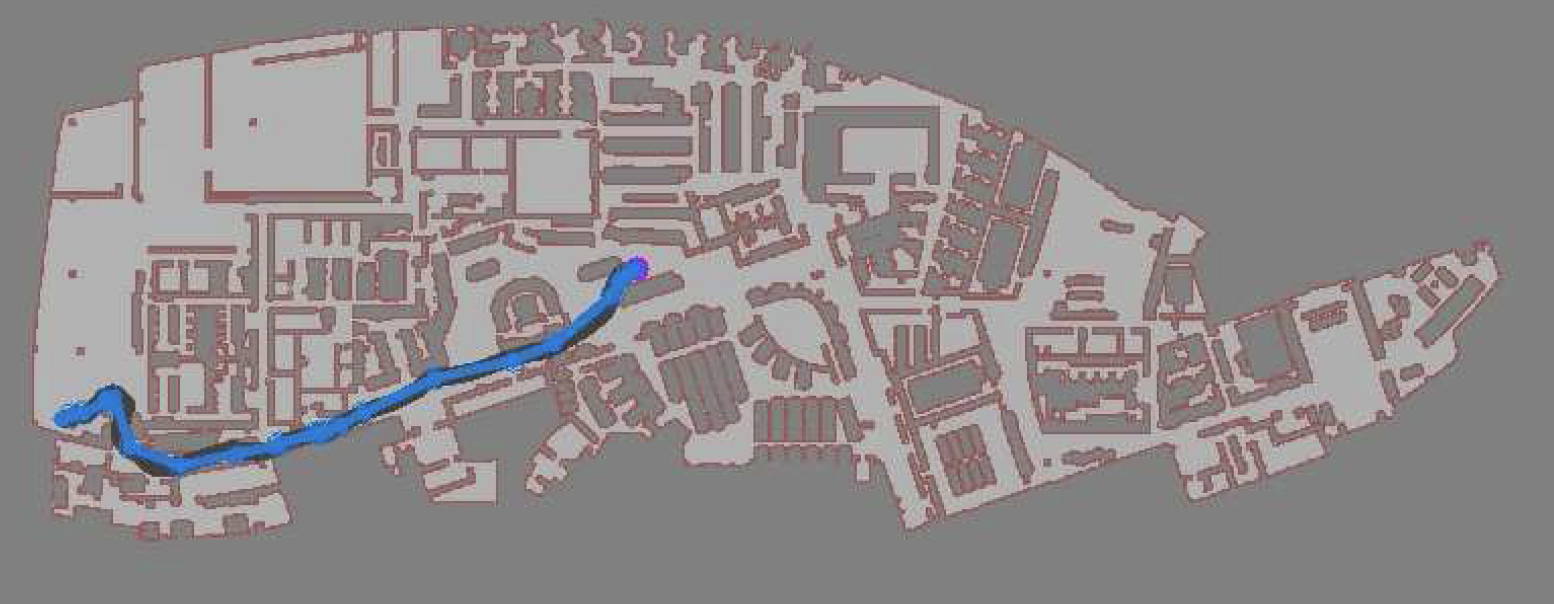

So, this happened! Our team's paper on "PRM-RL" - a way to teach robots to navigate their worlds which combines human-designed algorithms that use roadmaps with deep-learned algorithms to control the robot itself - won a best paper award at the ICRA robotics conference!

I talked a little bit about how PRM-RL works in the post "

We were cited not just for this technique, but for testing it extensively in simulation and on two different kinds of robots. I want to thank everyone on the team - especially Sandra Faust for her background in PRMs and for taking point on the idea (and doing all the quadrotor work with Lydia Tapia), Oscar Ramirez and Marek Fiser for their work on our reinforcement learning framework and simulator, Kenneth Oslund for his heroic last-minute push to collect the indoor robot navigation data, and our manager James for his guidance, contributions to the paper and support of our navigation work.

Woohoo! Thanks again everyone!

-the Centaur
