So, this happened! Our team’s paper on “PRM-RL” – a way to teach robots to navigate their worlds which combines human-designed algorithms that use roadmaps with deep-learned algorithms to control the robot itself – won a best paper award at the ICRA robotics conference!

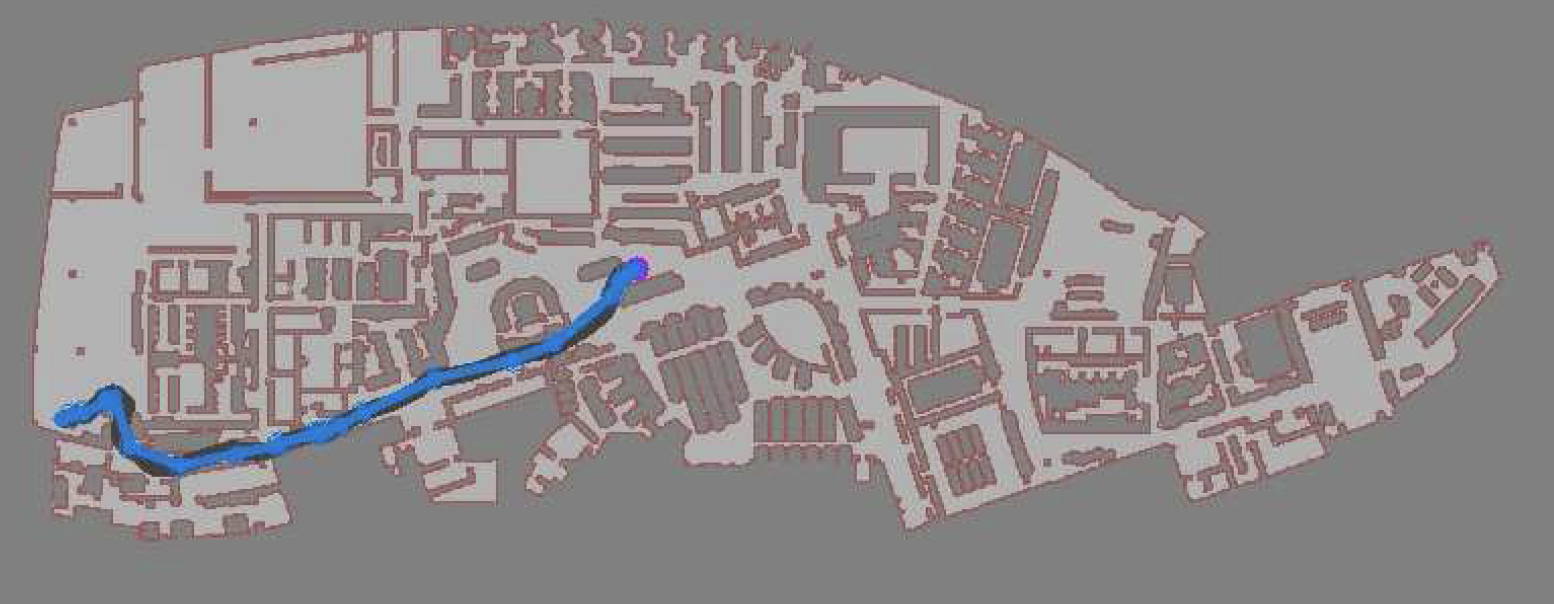

I talked a little bit about how PRM-RL works in the post “Learning to Drive … by Learning Where You Can Drive”, so I won’t go over the whole spiel here. The basic idea is that we’ve gotten good at teaching robots to control themselves using a technique called deep reinforcement learning (the RL in PRM-RL) that trains them in simulation, but it’s hard to extend this approach to long-range navigation problems in the real world. We overcome this barrier by using a more traditional robotic approach, probabilistic roadmaps (the PRM in PRM-RL), which build maps of where the robot can drive using point-to-point connections. We combine these maps with the robot simulator and, boom, we have a map of where the robot thinks it can successfully drive.
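For the curious, here is a toy Python sketch of that idea. To be clear, this is not our actual code: the 2D world, the single obstacle, the thresholds, and every function name are made up for illustration, and `simulate_drive` is a noisy straight-line stand-in for what would really be a trained RL agent running in a simulator. The part that matters is that an edge only goes into the roadmap if the simulated "agent" can actually drive it.

```python
import heapq
import math
import random

# Hypothetical toy world: a unit square with one circular obstacle.
OBSTACLE_CENTER = (0.5, 0.5)
OBSTACLE_RADIUS = 0.2


def in_collision(p):
    """True if point p lies inside the obstacle."""
    return math.dist(p, OBSTACLE_CENTER) < OBSTACLE_RADIUS


def simulate_drive(start, goal, rng, noise=0.01, steps=50):
    """Stand-in for rolling out a learned policy in simulation.

    A real system would run the trained agent here; this toy version
    walks a noisy straight line and fails if it touches the obstacle.
    """
    for i in range(1, steps + 1):
        t = i / steps
        x = start[0] + t * (goal[0] - start[0]) + rng.gauss(0, noise)
        y = start[1] + t * (goal[1] - start[1]) + rng.gauss(0, noise)
        if in_collision((x, y)):
            return False
    return True


def build_roadmap(n_nodes=60, radius=0.3, trials=5, min_success=1.0, seed=0):
    """Sample free points, then keep only edges the 'agent' can drive."""
    rng = random.Random(seed)
    nodes = []
    while len(nodes) < n_nodes:
        p = (rng.random(), rng.random())
        if not in_collision(p):
            nodes.append(p)
    edges = {i: [] for i in range(n_nodes)}
    for i in range(n_nodes):
        for j in range(i + 1, n_nodes):
            d = math.dist(nodes[i], nodes[j])
            if d > radius:
                continue  # only try to connect nearby points
            wins = sum(simulate_drive(nodes[i], nodes[j], rng)
                       for _ in range(trials))
            if wins / trials >= min_success:
                edges[i].append((j, d))
                edges[j].append((i, d))
    return nodes, edges


def shortest_path(edges, src, dst):
    """Dijkstra over the roadmap; returns node indices, or None."""
    best = {src: 0.0}
    prev = {}
    frontier = [(0.0, src)]
    while frontier:
        cost, u = heapq.heappop(frontier)
        if u == dst:
            path = [u]
            while u in prev:
                u = prev[u]
                path.append(u)
            return path[::-1]
        if cost > best.get(u, math.inf):
            continue  # stale queue entry
        for v, d in edges[u]:
            new_cost = cost + d
            if new_cost < best.get(v, math.inf):
                best[v] = new_cost
                prev[v] = u
                heapq.heappush(frontier, (new_cost, v))
    return None


if __name__ == "__main__":
    nodes, edges = build_roadmap()
    print(shortest_path(edges, 0, 1))
```

The path that comes out is a list of roadmap waypoints, or `None` if the two points ended up in disconnected pieces of the map. In this sketch the same stand-in "agent" that vetted each edge would then be asked to drive between consecutive waypoints.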

We were cited not just for this technique, but for testing it extensively in simulation and on two different kinds of robots. I want to thank everyone on the team: especially Sandra Faust for her background in PRMs and for taking point on the idea (and doing all the quadrotor work with Lydia Tapia), Oscar Ramirez and Marek Fiser for their work on our reinforcement learning framework and simulator, Kenneth Oslund for his heroic last-minute push to collect the indoor robot navigation data, and our manager James for his guidance, contributions to the paper and support of our navigation work.

Woohoo! Thanks again everyone!

-the Centaur
