Original Sin is the idea that all humans are irretrievably flawed by one bad decision made by Adam in the Garden of Eden. One bite of that apple (well, it wasn’t an apple, but never mind) broke Creation in the Fall and corrupted everyone’s souls from birth, leading to the requirement of baptism to liberate us.

But the Fall didn’t happen. The universe is not broken, but is unimaginably old and vast. The evolution of humans on the earth is one story out of myriads. The cosmology of the early Hebrews recorded in Genesis is myth – myth in the Catholic sense, a story, not necessarily true, designed to teach a lesson.

What lessons does Genesis teach, then?

Well, first off, that God created the universe; that it is well designed for life; that humanity is an important part of that creation; and that humans are vulnerable to temptation. Forget the Fall: the story of the serpent shows that humans out of the box can make shortsighted decisions that go horribly wrong.

But what’s the cause of this tendency to sin, if it isn’t a result of one bad choice in the Fall? The answer is surprisingly deep: it’s a fundamental flaw in the decision making process, a mathematical consequence of how we make decisions in a world where things change as a result of our choices.

Artificial intelligence researchers often model how we make choices using Markov decision processes. The idea is that we can model the world as a sequence of states (I’m at my desk, or in the kitchen, without a soda) in which we can take actions (like getting a Coke Zero from the fridge) and get rewards.

Ah, refreshing.

Markov decision processes are a simplification of the real world. They assume that time advances in discrete steps, that states and actions are drawn from known sets, and that the reward is a number. Most important is the Markov property, the idea that history doesn’t matter: only the current state dictates the result of an action.
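To make those assumptions concrete, here is a minimal sketch of the soda example as a toy Markov decision process. The state names, action names, and reward numbers are all invented for illustration, and the transitions are assumed to be deterministic for simplicity, whereas real MDPs usually allow an action to have several possible outcomes.

```python
# Hypothetical toy MDP: every state, action, and reward value is illustrative.
# TRANSITIONS[state][action] -> (next_state, reward)
# Assumption: transitions are deterministic, which real MDPs need not be.
TRANSITIONS = {
    "desk": {"go_to_kitchen": ("kitchen", 0.0), "stay": ("desk", 0.0)},
    "kitchen": {"get_soda": ("kitchen_with_soda", 1.0), "go_to_desk": ("desk", 0.0)},
    "kitchen_with_soda": {"go_to_desk": ("desk", 0.0)},
}


def step(state, action):
    """Markov property: the result depends only on (state, action), not on history."""
    return TRANSITIONS[state][action]


def run(start, actions):
    """Apply a sequence of actions one discrete time step at a time, summing the rewards."""
    state, total = start, 0.0
    for action in actions:
        state, reward = step(state, action)
        total += reward
    return state, total


final_state, total_reward = run("desk", ["go_to_kitchen", "get_soda", "go_to_desk"])
print(final_state, total_reward)
```

Note that `step` never looks at how we got to a state. That is the Markov property in code: the table lookup needs only the current state and the chosen action.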

Despite these simplifications, Markov decision processes expose many of the challenges of learning to act in the world. Attempts to make MDPs more realistic – assuming time is continuous, or states are only partially observable, or rewards are multidimensional – only make the problem more challenging, not less.

Hm, I’ve finished that soda. It was refreshing. Time for another?

Good performance at MDPs is hard because we can only observe our current state: you can’t be at two places or two times at once. The graph of states of an MDP is not a map of locations you can survey, but a set of possible moments in time which we may or may not reach as a result of our choices.

In an earlier essay, I described navigating this graph like trying to traverse a minefield, but it’s worse, since there’s no way to survey the landscape. The best you can do is to enumerate the possible actions in your current state and model what might happen, like waving a metal detector over the ground.

Should I get a Cherry Coke Zero, or a regular?

This kind of local decision making is sometimes called reactive, because we’re just reacting to what’s right in front of us, and it’s also called greedy, because we’re choosing the best actions out of the information available in the current state, regardless of what might come two or three steps later.

If you take the wrong path in a minefield, even if you don’t get blown up, you might have to backtrack … or wander into the crosshairs of the bad guys hiding in a nearby bunker. A sequence of locally good actions can lead us to a globally suboptimal outcome.

Excuse me for a moment. After drinking all those sodas, I need a bio break.

That’s the problem of local decision making: if you exist in even a slightly complicated world – say, one where the locally optimal action of having a cool fizzy soda can lead to bad outcomes three steps later, like bathroom breaks and a sleepless night – then those local choices can lead you astray.

The most extreme example is a Christian one. Imagine you have two choices: a narrow barren road versus a lovely garden path. Medieval Christian writers loved to show that the primrose path led straight to the everlasting bonfire, whereas the straight and narrow led to Paradise.

Or go back to the Garden of Eden, where eating the apple gave immediate knowledge and long-term punishment, and not eating it would have kept Adam and Eve in good grace with God. This is a simple two-stage, two-choice Markov decision process, in which the locally optimal action leads to a worse overall reward.

The solution to this problem is to not use a locally greedy policy operating over the reward given by each action, but to instead model the long-term reward of sequences of actions over the entire space, and to develop a global decision policy which takes into account the true ultimate value of each action.
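The difference between the two kinds of policy can be sketched on a two-stage, two-choice problem like the Garden of Eden one. Everything here is an assumption made for illustration: the state and action names, the reward numbers, deterministic transitions, and no discounting of future rewards. The lookahead is a plain exhaustive search, which only works because this toy graph is tiny and has no cycles.

```python
# Hypothetical two-stage MDP: names and reward numbers are invented for illustration.
# MDP[state][action] -> (next_state, reward); a state with no actions is terminal.
MDP = {
    "garden": {"eat": ("knowing", 1.0), "abstain": ("innocent", 0.0)},
    "knowing": {"continue": ("end", -10.0)},
    "innocent": {"continue": ("end", 5.0)},
    "end": {},
}


def greedy_action(state):
    """Local policy: pick the action with the best immediate reward."""
    actions = MDP[state]
    return max(actions, key=lambda a: actions[a][1])


def value(state):
    """Best total reward reachable from this state (exhaustive lookahead)."""
    actions = MDP[state]
    if not actions:
        return 0.0
    return max(reward + value(nxt) for nxt, reward in actions.values())


def global_action(state):
    """Global policy: pick the action with the best immediate plus future reward."""
    actions = MDP[state]
    return max(actions, key=lambda a: actions[a][1] + value(actions[a][0]))


def rollout(state, policy):
    """Follow a policy until a terminal state and return the total reward."""
    total = 0.0
    while MDP[state]:
        state, reward = MDP[state][policy(state)]
        total += reward
    return total


print("greedy:", greedy_action("garden"), rollout("garden", greedy_action))
print("global:", global_action("garden"), rollout("garden", global_action))
```

With these made-up numbers the greedy policy eats and ends at a total of -9.0, while the lookahead policy abstains and ends at 5.0. In larger problems exhaustive search is not feasible, which is part of why approximating the long-term value is hard.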

Global decision policies sometimes mean delaying gratification. To succeed at life, we often need to do the things which are difficult right now, like skipping dessert, in favor of getting more reward later, like seeing the numbers on your scale go back down to their pre-Covid levels.

Global decision policies also resemble moral rules. Whether based on revelation from God, as discussed in an earlier essay, or based on the thinking of moral philosophers, or just the accumulated knowledge of a culture, our moral rules provide us a global decision policy that helps us avoid bad consequences.

The flaw in humanity which inspired Original Sin and is documented in the Book of Genesis is simply this: we’re finite beings that exist at a single point in time and can’t see the long-term outcome of our choices. To make good decisions, we must develop global policies which go beyond what we see.

Or, for a Christian, we must trust God to give us moral standards to guide us towards the good.

-the Centaur

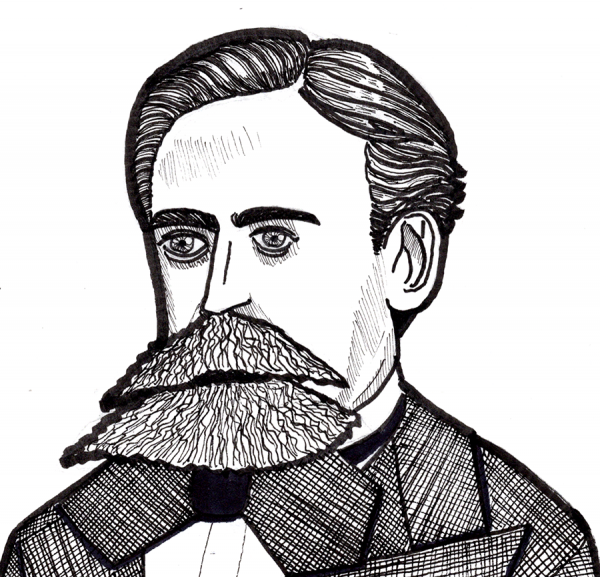

Pictured: Andrey Markov.