But I am going to take a rest for a bit.

Above you see a shot of my cat Lenora resting in front of the "To Read Science Fiction" section of my Library, the enormous book collection I've been accumulating over the last quarter century. I have books older than that, of course, but they're stored in my mother's house in my hometown. Only over the last 25 years or so have I been accumulating my own personal library.

But why am I, if not resting, at least thinking about it? Because I finished organizing the books in my Library.

I have an enormous amount of papers, bills, bric-a-brac and other memorabilia still to organize, file, trash or donate, but the Library itself is organized, at last. It's even possible to use it.

How organized? Well...

Religion, politics, economics, the environment, women's studies, Ayn Rand, read books, Lovecraft, centaur books, read urban fantasy, read science fiction, Atlanta, read comics, to-read comics, to-read science fiction magazines, comic reference books, drawing reference books, steampunk, urban fantasy, miscellaneous writing projects, Dakota Frost, books to donate, science fiction to-reads: Asimov, Clarke, Banks, Cherryh, miscellaneous, other fiction to-reads, non-fiction to-reads, general art books, genre art books, BDSM and fetish magazines and art books, fetish and sexuality theory and culture, military, war, law, space travel, astronomy, popular science, physics of time travel, Einstein, quantum mechanics, Feynman, more physics, mathematics, philosophy, martial arts, health, nutrition, home care, ancient computer manuals, more recent computer manuals, popular computer books, the practice of computer programming, programming language theory, ancient computer languages, Web languages, Perl, Java, C and C++, Lisp, APL, the Art of Computer Programming, popular cognitive science, Schankian cognitive science, animal cognition, animal biology, consciousness, dreaming, sleep, emotion, personality, cognitive science theory, brain theory, brain philosophy, evolution, human evolution, cognitive evolution, brain cognition, memory, "Readings in …" various AI and cogsci disciplines, oversized AI and science books, conference proceedings, technical reports, game AI, game development, robotics, imagery, vision, information retrieval, natural language processing, linguistics, popular AI, theory of AI, programming AI, AI textbooks, AI notes from recent projects, notes from college from undergraduate through my thesis, more Dakota Frost, GURPS, other roleplaying games, Magic the Gathering, Dungeons and Dragons, more Dakota Frost, recent projects, literary theory of Asimov and Clarke, literary theory of science fiction, science fiction shows and TV, writing science fiction, mythology, travel, writing science, writing reference, writers on writing, writing markets, poetry, improv, voice acting, film, writing film, history of literature, representative examples, oversized reference, history, anthropology, dictionaries, thesauri, topical dictionaries, language dictionaries, language learning, Japanese, culture of Japan, recent project papers, comic archives, older project papers, tubs containing things to file … and the single volume version of the Oxford English Dictionary, complete with magnifying glass.

I deliberately left out the details of many categories and outright omitted a few others not stored in the library proper, like my cookbooks, my display shelves of Arkham House editions, Harry Potter and other hardbacks, my "favorite" nonfiction books, some spot reading materials, a stash of transhumanist science fiction, all the technical books I keep in the shelf next to me at work … and, of course, my wife's and my enormous collection of audiobooks.

What's really interesting about all that to me is that there are far more categories out in the world that aren't in my Library than there are in it. Try it sometime: go into a bookstore or library, or peruse the list of categories in the Library of Congress Classification or the Dewey Decimal Classification. There are far more things to think about than even I, a borderline hoarder with a generous income and an enormous knowledge of bookstores, have been able to accumulate in a quarter century.

Makes you think, doesn't it?

-the Centaur

Let me be completely up front about my motivation for writing this post: recently, I came across a paper that was similar to the work in my PhD thesis but applied to a different area. The paper didn’t cite my work (in fact, its survey of related work seemed to indicate that no prior work along the lines of mine existed), and when I alerted the authors to the omission, they told me they’d cited all relevant work and claimed “my obscure dissertation probably wasn’t relevant.” Clearly, I haven’t done a good enough job articulating or promoting my work, so I thought I should take a moment to explain what I did for my doctoral dissertation.

My research improved computer memory by modeling it after human memory. People remember different things in different contexts based on how different pieces of information are connected to one another. Even a word as simple as ‘ford’ can call different things to mind depending on whether you’ve bought a popular brand of car, watched the credits of an Indiana Jones movie, or tried to cross the shallow part of a river. Based on that human phenomenon, I built a memory retrieval engine that used context to remember relevant things more quickly.

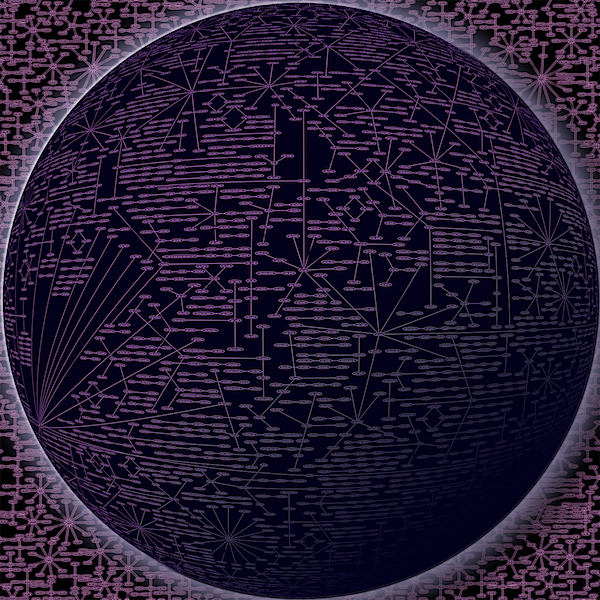

My approach was based on a technique I called context-directed spreading activation, which I argued was an advance over so-called “traditional” spreading activation. Spreading activation is a technique for finding information in a kind of computer memory called a semantic network, which models relationships in the human mind. A semantic network represents knowledge as a graph, with concepts as nodes and relationships between concepts as links, and traditional spreading activation finds information in that network by starting with a set of “query” nodes and propagating “activation” out on the links, like current in an electric circuit. The current that hits each node in the network determines how highly ranked the node is for a query. (If you understand circuits and spreading activation, and this description caused you to catch on fire, my apologies. I’ll be more precise in future blog posts. Roll with it.)
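To make the circuit analogy a little more concrete, here is a minimal Python sketch of traditional spreading activation. It is an illustration under stated assumptions, not the formulation used in the thesis: the tiny "ford" network, the link weights, the fixed number of propagation `steps`, and the multiplicative `decay` are all invented for this example. The point to notice is that every step pushes activation across every outgoing link of every active node.

```python
from collections import defaultdict

# Hypothetical semantic network: node -> list of (neighbor, link_weight).
# Nodes, links, and weights are invented for illustration only.
NETWORK = {
    "ford": [("car", 1.0), ("harrison ford", 1.0), ("river crossing", 1.0)],
    "car": [("ford", 1.0), ("engine", 1.0)],
    "harrison ford": [("ford", 1.0), ("indiana jones", 1.0)],
    "river crossing": [("ford", 1.0), ("shallow water", 1.0)],
    "engine": [],
    "indiana jones": [],
    "shallow water": [],
}


def spread_activation(network, query, steps=2, decay=0.5):
    """Traditional spreading activation: push activation over every link.

    Returns (node, total_activation) pairs, highest activation first.
    """
    total = defaultdict(float)
    frontier = {node: 1.0 for node in query}  # query nodes start fully active
    for node, value in frontier.items():
        total[node] += value
    for _ in range(steps):
        next_frontier = defaultdict(float)
        for node, value in frontier.items():
            # No filtering: every outgoing link carries activation.
            for neighbor, weight in network.get(node, []):
                next_frontier[neighbor] += value * weight * decay
        for node, value in next_frontier.items():
            total[node] += value  # accumulate activation across steps
        frontier = next_frontier
    return sorted(total.items(), key=lambda kv: -kv[1])


if __name__ == "__main__":
    for node, score in spread_activation(NETWORK, ["ford"]):
        print(f"{node}: {score}")
```

Querying `"ford"` activates cars, Harrison Ford, and river crossings equally (0.5 each), because nothing in the traditional scheme tells the search which sense of the word matters; activation also echoes back to `"ford"` along the reverse links.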

The problem is, as semantic networks grow large, there’s a heck of a lot of activation to propagate. My approach, context-directed spreading activation (CDSA), cuts this cost dramatically by making activation propagate over fewer types of links. In CDSA, each link has a type, each type has a node, and activation propagates only over links whose nodes are active (to a very rough first approximation, although in my evaluations I tested just about every variant of this under the sun). Propagating over active links isn’t just cheaper than spreading activation over every link; it’s smarter: the same “query” nodes can activate different parts of the network, depending on which “context” nodes are active. So, if you design your network right, Harrison Ford is never going to occur to you if you’ve been thinking about cars.
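A minimal sketch of that rough first approximation, again in Python, might look like this. Each link carries a type, each type is named by a node, and a link is traversed only if its type node is in the set of active context nodes. The network, the type names `cars`, `movies`, and `rivers`, and the function name `context_directed_spread` are hypothetical, and treating the context as a fixed set supplied by the caller is a simplifying assumption; the thesis evaluated many variants beyond this one.

```python
from collections import defaultdict

# Hypothetical typed semantic network: node -> list of (neighbor, link_type).
# Each link type is itself named by a node ("cars", "movies", "rivers").
TYPED_NETWORK = {
    "ford": [("car", "cars"), ("harrison ford", "movies"),
             ("river crossing", "rivers")],
    "car": [("engine", "cars")],
    "harrison ford": [("indiana jones", "movies")],
    "river crossing": [("shallow water", "rivers")],
}


def context_directed_spread(network, query, context, steps=2, decay=0.5):
    """Spread activation only over links whose type node is active.

    Returns (ranking, links_traversed) so the saving is visible.
    """
    active_types = set(context)  # assumption: context is a fixed set of nodes
    total = defaultdict(float)
    frontier = {node: 1.0 for node in query}
    for node, value in frontier.items():
        total[node] += value
    traversed = 0
    for _ in range(steps):
        next_frontier = defaultdict(float)
        for node, value in frontier.items():
            for neighbor, link_type in network.get(node, []):
                if link_type not in active_types:
                    continue  # type node inactive: skip the link, no work done
                traversed += 1
                next_frontier[neighbor] += value * decay
        for node, value in next_frontier.items():
            total[node] += value
        frontier = next_frontier
    ranking = sorted(total.items(), key=lambda kv: -kv[1])
    return ranking, traversed


if __name__ == "__main__":
    ranking, cost = context_directed_spread(TYPED_NETWORK, ["ford"], {"cars"})
    print(ranking)  # [('ford', 1.0), ('car', 0.5), ('engine', 0.25)]
    print(cost)     # 2
```

With only `cars` active, the query traverses 2 links and never reaches Harrison Ford; with all three type nodes active, the same query traverses 6 links and ranks every sense equally. The fewer link types are active, the fewer links get touched.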

I was a typical graduate student, and I thought my approach was so good it was good for everything, so I built an entire cognitive architecture around the idea. (Cognitive architectures are general reasoning systems, normally built by teams of researchers, and building even a small one is part of the reason my PhD thesis took ten years, but I digress.) My cognitive architecture was called context-sensitive asynchronous memory (CSAM), and it automatically collected context while the system was thinking, fed it into the context-directed spreading activation system, and incorporated dynamically remembered information into its ongoing thought processes using patch programs called integration mechanisms.

CSAM wasn’t just an idea: I built it out into a computer program called Nicole, and even published a workshop paper on it in 1997 called “